Foundation brings unique insights on business, building product, driving growth, and accelerating your career — from CEOs, founders and insiders.

A/B Tests Rarely Make Sense: How and When to Use Them

You can't A/B test an invention.

Apple didn't A/B test the iPod or the iPhone. Tony Fadell, a creator of the iPod and iPhone, didn't A/B test Nest, the revolutionary home thermostat he launched after leaving Apple. OpenAI could not A/B test ChatGPT before launching it.

An A/B test—testing one variation of a product against another to determine which resonates more with customers—cannot be used to invent new things. How can you test something that doesn't exist against a variation of itself? And yet, the A/B test has become a ubiquitous crutch at corporations of all sizes.

When teams don't have a decisive leader, people say things like, "Let's test it to see what users want," or "We're a data-driven organization, so let's rely on a test to decide." This thinking is a thinly veiled disguise for decision by committee. It leads to marginal decisions and small changes. Committees distribute responsibility, and the buck stops with no one. Mediocrity follows.

X (Twitter)

Can you test laying off 80% of a company?

When Elon Musk purchased X (formerly Twitter), he had a problem: the company was quickly burning cash—his cash.

Musk solved this by drastically reducing costs, firing 80% of the workforce. Prior management, the media, and even X's own users at the time were sure that the company needed most of its 7,500 employees to function. Musk could not A/B test laying off a large portion of the workforce. Instead, he relied on judgment gained from running multiple companies with thousands of employees to estimate that X needed far fewer workers.

He did what Jeff Bezos made famous at Amazon: disagree and commit. He made an unpopular decision to slash headcount, which would be impossible to undo. While big, untestable decisions certainly lead to mistakes, they avoid stagnation. Musk's alternative was preserving what was, which would have led to bankruptcy for a company that had not made a profit in years. Today, X has approximately 1,500 employees and is functioning well.

When should you run an A/B test?

An A/B test only works if you start with an opinionated hypothesis, have enough data, and can test an isolated feature.

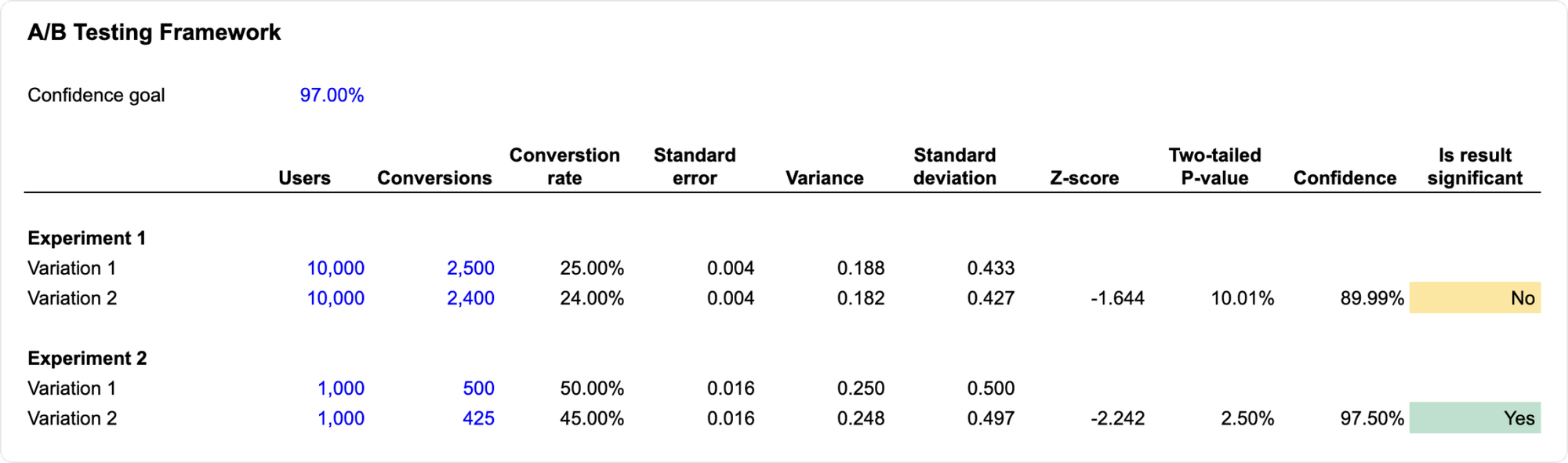

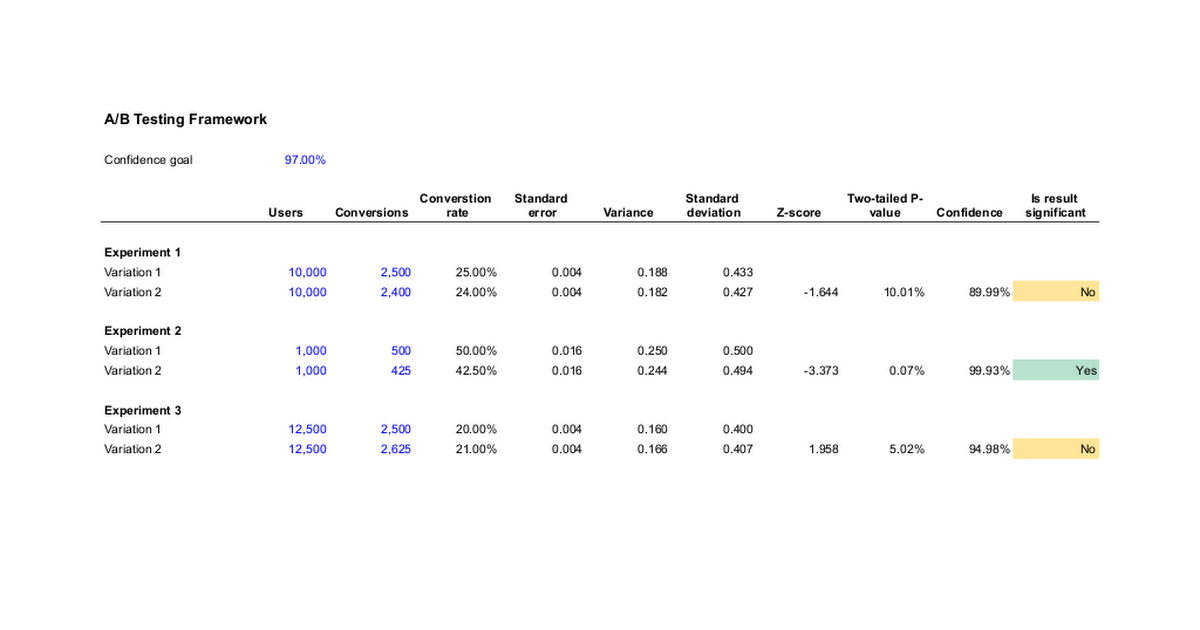

Below is a link to the A/B testing template I use to run every A/B test. But before running a test, you must make sure that three things are true: (i) you have an opinionated hypothesis, (ii) you can collect enough data, and (iii) you're testing an isolated feature.

Opinionated hypothesis

You need taste and intuition to decide what to build. Blindly trusting the result of an A/B test is a mistake. For example, if you test removing an in-app option to cancel a subscription, customer churn will obviously fall. But removing the ability to cancel is an awful user experience and, in many jurisdictions, illegal.

If you replace a black button with a red button, the red one will almost always get more clicks. Do that enough times, and an app will become an unusable cacophony of color, and red will become meaningless. Trusting an unopinionated A/B test drives short-term gain while hurting long-term usability.

Karri Saarinen, the CEO of Linear, a popular task management app, complains about the effect of A/B tests on product experience. The result of his stance is an application with a user experience far superior to any other task management or bug tracking software.

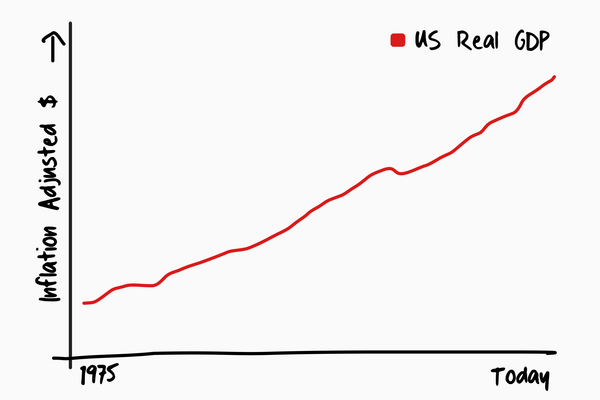

Enough data

You need enough data to measure a statistically significant result. For example, if you are trying to make a 5% relative improvement in conversion, from 20% to 21%, you need 25,000 users to try a feature in order to trust the result with 95% confidence! To trust it with 90% confidence, 18,000 users must try the feature. See experiment 3 in the template above. Even at 90% confidence, a change that makes no real difference will still produce a false positive about one time in ten, and with fewer than 18,000 users, the test cannot reliably detect an improvement this small even at that level of confidence.
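To make the arithmetic behind numbers like these concrete, here is a minimal Python sketch of a standard sample-size approximation for comparing two conversion rates. It is not the author's template: the function name, the two-sided test, and the 80% statistical power are all assumptions, and different assumptions will give different totals.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variant(p_control, p_variant, confidence=0.95, power=0.80):
    """Approximate users needed in EACH variant of a two-proportion z-test.

    Assumptions (not from the article): two-sided test, 80% power by default.
    """
    if p_control == p_variant:
        raise ValueError("the two conversion rates must differ")
    alpha = 1.0 - confidence
    z_alpha = NormalDist().inv_cdf(1.0 - alpha / 2.0)  # critical value for the confidence level
    z_beta = NormalDist().inv_cdf(power)               # critical value for the desired power
    variance = p_control * (1 - p_control) + p_variant * (1 - p_variant)
    effect = p_variant - p_control
    return ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


# The article's example: lifting conversion from 20% to 21%.
n_95 = sample_size_per_variant(0.20, 0.21, confidence=0.95)
n_90 = sample_size_per_variant(0.20, 0.21, confidence=0.90)
print(n_95, n_90)
```

Under these assumptions the result is roughly 25,600 users per variant at 95% confidence and roughly 20,100 at 90%. The template's figures may rest on different assumptions, but the order of magnitude is the point: detecting a one-point lift takes tens of thousands of users.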

The most common error in trusting an A/B test is ignoring statistics. Few products have enough users to even consider running a meaningful A/B test.
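One way to avoid ignoring the statistics is to check a result's p-value before trusting it. The sketch below uses a pooled two-proportion z-test, a common textbook choice rather than anything the article prescribes, and every count in it is hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value for the difference between two conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # conversion rate if A and B were identical
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / std_err
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Hypothetical: 20% vs 21% conversion with 2,000 users per variant.
small_test = two_proportion_p_value(400, 2_000, 420, 2_000)

# Hypothetical: the same rates with 30,000 users per variant.
large_test = two_proportion_p_value(6_000, 30_000, 6_300, 30_000)

print(f"small test p-value: {small_test:.3f}")
print(f"large test p-value: {large_test:.4f}")
```

The observed lift is identical in both cases, but only the larger test clears a conventional 0.05 threshold. With the small sample, the same one-point difference is indistinguishable from noise.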

Test an isolated feature

You can only test an isolated feature within a larger product.

One such test is charging a subscription fee to all customers, which we ran at Albert, the banking app I run. We had long believed that customers who chose not to pay a subscription fee for paywalled features would never pay a subscription fee for any part of the app. In a bid to transform Albert's business model by raising revenue per active user, we ran an A/B test that diverted a portion of new signups to an app entirely paywalled by a subscription fee.
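Diverting a portion of new signups can be done in many ways. The following is a minimal sketch of one common approach, deterministic hash-based assignment. It is not a description of how Albert ran its test: the function name, the experiment key, and the 10% share are all hypothetical.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, treatment_share: float = 0.10) -> str:
    """Deterministically place a user in 'treatment' or 'control'.

    Hashing the experiment name together with the user ID keeps each user
    in the same group on every call and keeps separate experiments independent.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) / 0x100000000  # map the first 32 bits to [0, 1)
    return "treatment" if bucket < treatment_share else "control"


# Hypothetical usage: send about 10% of new signups to the fully paywalled app.
groups = [assign_variant(f"user-{i}", "full-paywall") for i in range(10_000)]
treatment_fraction = groups.count("treatment") / len(groups)
print(f"share in treatment: {treatment_fraction:.1%}")
```

Because assignment depends only on the user ID and the experiment name, a returning user always sees the same version of the app, which helps keep the tested change isolated from everything else.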

With subscription required, conversion to paid subscription doubled, but surprisingly the percentage of users active two months after joining stayed the same. Previously non-paying customers were now all paying for the app. We learned that the product provided enough value that all customers were willing to pay for it.

Use judgment
